SignIQ Lab EgoSuite

A High-Quality, Multi-Modality, Globally Scalable Egocentric Human Data Solution

SignIQ Lab Team • Dec 4, 2025

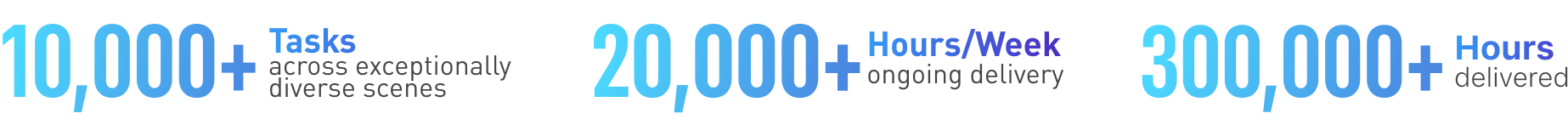

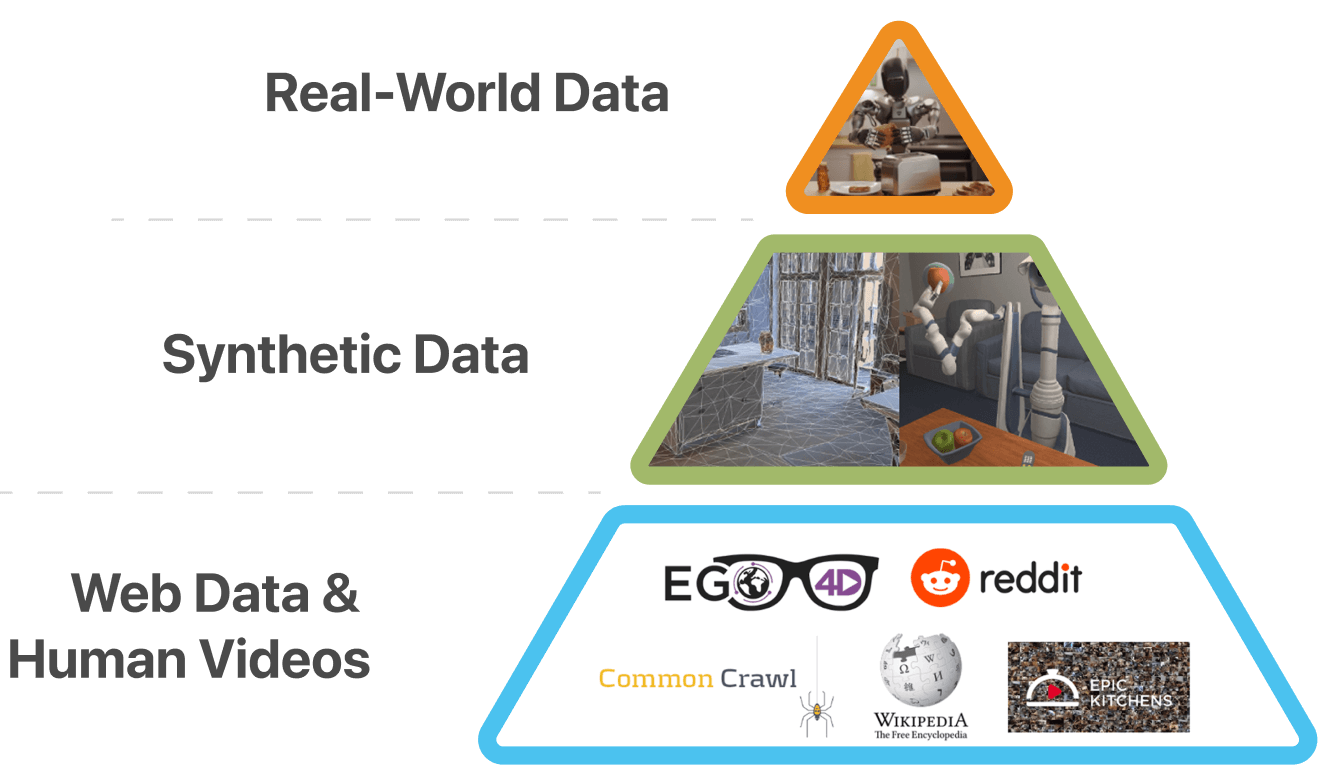

Robotics foundation models and world models are advancing at unprecedented speed, yet they all face the same fundamental constraint: the field lacks sufficient, diverse, and high-quality robot-usable data. High-quality data, such as simulation-ready (SimReady) and physically accurate assets, is critical to closing the sim-to-real gap and supporting real-world robot performance. SignIQ Lab approaches this challenge through the lens of the embodied AI “data pyramid”, a structure that summarizes the industry’s main data sources and their inherent tradeoffs.

Figure 1. A visual “data pyramid” illustrating the landscape of robot-training data sources.

At the bottom of this pyramid lie web data and human videos: massive in scale and diversity, but fundamentally missing the first-person, contact-rich interaction signals required for manipulation and physical reasoning. At the very top sits real-world data (real-robot teleoperation data), which provides exactly those high-value action trajectories but cannot scale: teleoperation requires physical robot hardware, controlled lab environments, and slow operator workflows, and it cannot be deployed across the messy, varied real-world environments where robots must eventually operate.

This gap between “diverse-but-shallow” web data and “valuable-but-unscalable” teleoperation data has pushed the field toward a clear consensus: embodied AI needs robot-agnostic data sources, that is, sources that capture diverse actions at scale without requiring a physical robot in the loop. Within this space, two categories have become especially important.

Across industry and research, egocentric human data is emerging as a foundational requirement for embodied AI and world-model training. It represents the key data layer the field is converging toward as models grow and traditional data sources fall short.

The SignIQ Lab EgoSuite

High-Quality Annotation

Our post-processing stack transforms raw egocentric videos into high-quality, structured, and fully annotated data ready for robot learning.

Using a suite of in-house models, we automatically label upper-body and hand poses, segment human actions, and generate semantic annotations for actions, objects, and scenes. With these rich multimodal annotations, the resulting high-quality data can significantly accelerate the development and training of VLA models and world models.
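As a rough illustration of what such annotations could look like once structured, the sketch below models one annotated clip with per-frame hand keypoints, timed action segments, and object and scene labels. Every name here (`AnnotatedClip`, `ActionSegment`, the field names, the example values) is hypothetical and chosen for illustration only; it is not SignIQ Lab's actual schema or delivery format.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical schema: field names and units are assumptions for illustration,
# not the actual EgoSuite annotation format.
Keypoint = tuple[float, float, float]  # (x, y, z) in an assumed camera frame


@dataclass
class ActionSegment:
    start_s: float                 # segment start time, in seconds
    end_s: float                   # segment end time, in seconds (exclusive)
    label: str                     # semantic action label
    objects: list[str] = field(default_factory=list)  # objects involved


@dataclass
class AnnotatedClip:
    clip_id: str
    scene: str                     # semantic scene label, e.g. "kitchen"
    fps: float
    hand_poses: list[list[Keypoint]] = field(default_factory=list)  # one keypoint list per frame
    segments: list[ActionSegment] = field(default_factory=list)

    def segments_at(self, t: float) -> list[ActionSegment]:
        """Return every action segment that is active at time t (seconds)."""
        return [s for s in self.segments if s.start_s <= t < s.end_s]

    def frame_index(self, t: float) -> int:
        """Map a timestamp in seconds to the nearest earlier frame index."""
        return int(t * self.fps)


# Example values are made up.
clip = AnnotatedClip(
    clip_id="demo-0001",
    scene="kitchen",
    fps=30.0,
    segments=[
        ActionSegment(0.0, 2.5, "pick up mug", ["mug"]),
        ActionSegment(2.5, 4.0, "pour water", ["kettle", "mug"]),
    ],
)
print([s.label for s in clip.segments_at(1.0)])
```

Keeping action segments as timed intervals, separate from the per-frame pose arrays, lets a training pipeline look up the active action for any frame without duplicating the label on every frame.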

Global Operations across Real Environments

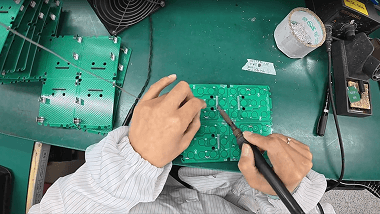

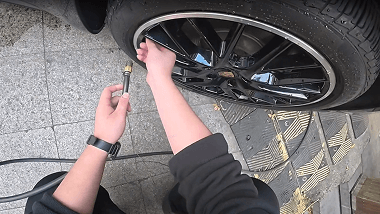

Underpinning our capture hardware is SignIQ Lab’s global field-operations network, built specifically for collecting egocentric human data at industrial scale. Capture sessions span a wide range of real-world settings: homes and daily living spaces, commercial and service environments, manufacturing floors, logistics and warehousing sites, outdoor and field tasks, and public infrastructure. This operational footprint provides the geographic, cultural, and task diversity required for training generalist robots and world models that must function reliably across varied, unstructured human environments.

Multi-Modality Capture Devices for Scalable Human Demonstrations

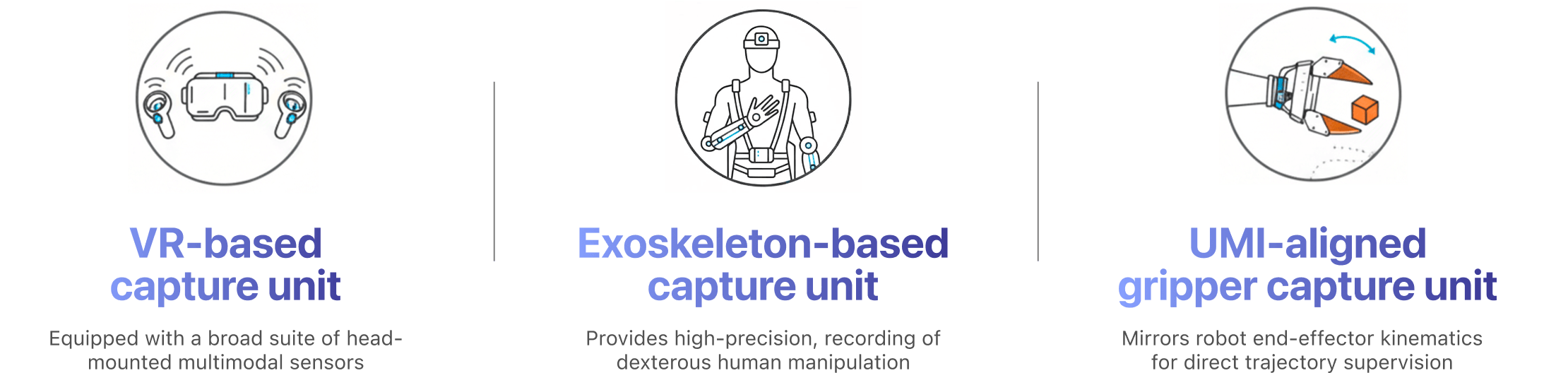

To support diverse robotic learning requirements, we operate multiple classes of robot-native capture devices: an integrated VR-based capture unit equipped with a broad suite of head-mounted multimodal sensors; a custom exoskeleton-based capture system that provides high-precision recording of dexterous human manipulation; and a UMI-aligned gripper interface that mirrors robot end-effector kinematics for direct trajectory supervision.

Diverse capture rigs for every scenario

Figure 2. Multi-modality capture devices combining VR units, exoskeleton interfaces, and UMI-aligned grippers to record RGB-D, pose, tactile, and other interaction signals.

Work with SignIQ Lab

As scaling laws continue to hold for embodied AI and world models, the limiting factor is no longer model capacity but the availability of dense, diverse, robot-usable data. Egocentric human data offers the most scalable path toward bridging this gap, and SignIQ Lab has already delivered over 300,000 hours of high-quality egocentric data across real homes, factories, warehouses, and public environments worldwide.

With the SignIQ Lab EgoSuite, robotics teams can access the diverse, high-quality, large-scale datasets required to train next-generation generalist robots and world models.

SignIQ Lab is now partnering with leading teams in embodied AI, world-model development, and frontier robotics research.

To explore datasets, request a demo, or discuss custom scenarios, contact SignIQ Lab for early access.